Starting from the basics: what is Phi-3?

Microsoft researchers have developed a new class of small language models (SLMs) called Phi-3. These models offer many of the capabilities of large language models (LLMs) but are significantly smaller and more efficient. The Phi-3-mini model, with 3.8 billion parameters, outperforms models of similar size and even some larger ones in various benchmarks for language, coding, and math tasks.

Accessible AI with Phi-3 models

Phi-3 models are designed to be more accessible and easier to use, especially for organisations with limited resources. They are particularly suitable for tasks that can run locally on a device, minimising latency and maximising privacy. This makes them ideal for quick-response scenarios or where data must stay on-premises, such as in regulated industries or rural areas without reliable network access.

Innovative training methods

The development of these models was inspired by how children learn. Microsoft researchers trained them on high-quality, selectively chosen data. In earlier work, they started from a vocabulary of 3,000 words and generated millions of tiny children’s stories. The resulting dataset, called “TinyStories”, helped Microsoft build efficient small models that still perform well for their size.
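The word-list idea described above can be sketched in a few lines of Python. This is only an illustration, not Microsoft's actual pipeline: the tiny vocabulary, the prompt wording, and the function names `build_story_prompt` and `build_prompts` are all assumptions made for the example. It shows how a small, fixed vocabulary can be sampled to produce many varied story prompts, which would then be passed to a language model to write the stories.

```python
import random

# Hypothetical miniature vocabulary for illustration only; the article
# describes a list of about 3,000 words.
VOCABULARY = {
    "noun": ["dog", "ball", "tree", "boat"],
    "verb": ["jump", "find", "share", "sing"],
    "adjective": ["happy", "tiny", "brave", "red"],
}


def build_story_prompt(rng: random.Random) -> str:
    """Pick one word per category and build a story-writing prompt."""
    noun = rng.choice(VOCABULARY["noun"])
    verb = rng.choice(VOCABULARY["verb"])
    adjective = rng.choice(VOCABULARY["adjective"])
    # The prompt wording is an assumption, not a documented template.
    return (
        "Write a short story for a young child that uses the noun "
        f"'{noun}', the verb '{verb}', and the adjective '{adjective}'."
    )


def build_prompts(count: int, seed: int = 0) -> list[str]:
    """Build `count` prompts from a seeded generator so runs are repeatable."""
    rng = random.Random(seed)
    return [build_story_prompt(rng) for _ in range(count)]


if __name__ == "__main__":
    for prompt in build_prompts(3):
        print(prompt)
```

Seeding the generator keeps the prompt list reproducible, which makes it easier to inspect or filter the generated data later.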

Availability and expansion

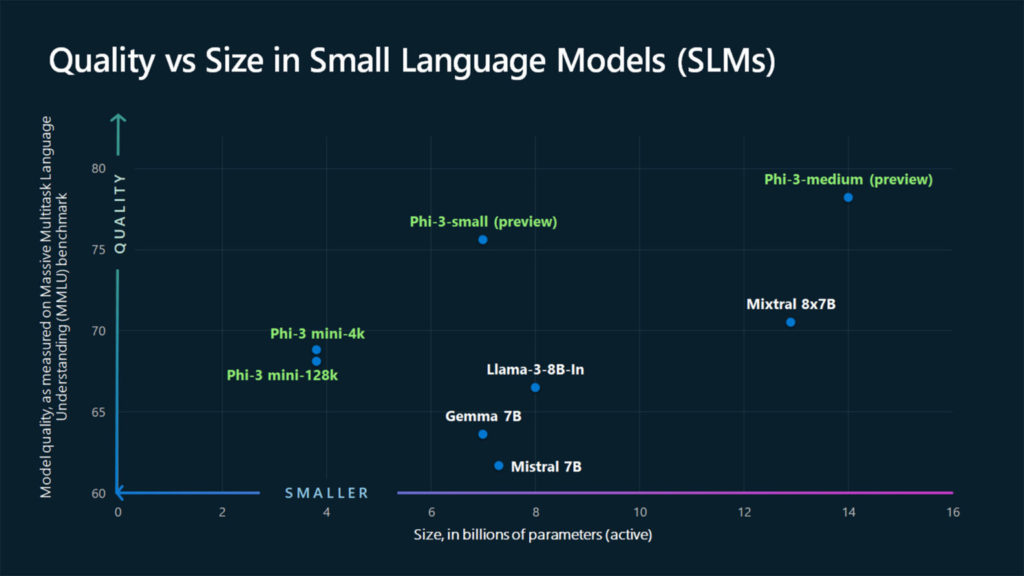

Starting with Phi-3-mini, these models are now available in the Microsoft Azure AI Model Catalog, on Hugging Face, and as NVIDIA NIM microservices. Additional models in the Phi-3 family, such as Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion parameters), will be available soon. This expansion offers more choices across quality and cost.

Graphic illustrating how the quality of new Phi-3 models, as measured by performance on the Massive Multitask Language Understanding (MMLU) benchmark, compares to other models of similar size (Source: Microsoft).

“What we’re going to start to see is not a shift from large to small, but a shift from a singular category of models to a portfolio of models where customers get the ability to make a decision on what is the best model for their scenario” — Sonali Yadav, Principal Product Manager for Generative AI at Microsoft.

Complementing large language models

SLMs are not intended to replace LLMs. Instead, they complement them by handling simpler tasks efficiently. This allows LLMs to focus on more complex queries. This combination provides a versatile portfolio of models for different needs, balancing performance, cost, and accessibility.
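One way to picture this division of labour is a simple router that sends easy requests to a small model and harder ones to a large model. The sketch below is a minimal illustration under stated assumptions: the model labels, the keyword list, the word-count threshold, and the `route_query` function are all hypothetical, and a real system would likely use a far better measure of task complexity.

```python
from dataclasses import dataclass

# Hypothetical labels standing in for a small and a large model.
SMALL_MODEL = "small-model"
LARGE_MODEL = "large-model"

# Crude, assumed signals that a request may need deeper reasoning.
COMPLEX_HINTS = ("prove", "analyse", "analyze", "multi-step", "plan")


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route_query(query: str, max_small_words: int = 40) -> RoutingDecision:
    """Choose a model using a word count and a keyword check."""
    lowered = query.lower()
    if len(lowered.split()) > max_small_words:
        return RoutingDecision(LARGE_MODEL, "query is long")
    # Substring matching is deliberately simple and can misfire.
    if any(hint in lowered for hint in COMPLEX_HINTS):
        return RoutingDecision(LARGE_MODEL, "query contains a complexity hint")
    return RoutingDecision(SMALL_MODEL, "query looks simple")


if __name__ == "__main__":
    for text in ("Summarise this email", "Plan a multi-step data migration"):
        decision = route_query(text)
        print(f"{text!r} -> {decision.model} ({decision.reason})")
```

The heuristic matters less than the shape: the application decides per request which model in the portfolio to call, trading quality against cost and latency.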

Practical applications

SLMs can power a range of applications. These include summarising long documents, generating marketing content, supporting chatbots, and enabling offline AI experiences. By making AI more accessible, Microsoft aims to empower more people and organisations to use AI in innovative ways.

Future outlook

Microsoft researchers believe that both small and large models will coexist. Each will serve distinct roles based on task complexity and resource availability. This approach ensures that AI technology remains adaptable and scalable, meeting diverse needs across various sectors.

